Snowflake (NYSE: SNOW), the Data Cloud company, today announced

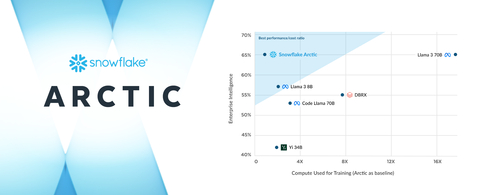

Snowflake Arctic, a state-of-the-art large language model (LLM)

uniquely designed to be the most open, enterprise-grade LLM on the

market. With its unique Mixture-of-Experts (MoE) architecture,

Arctic delivers top-tier intelligence with unparalleled efficiency

at scale. It is optimized for complex enterprise workloads, topping

several industry benchmarks across SQL code generation, instruction

following, and more. In addition, Snowflake is releasing Arctic’s

weights under an Apache 2.0 license and details of the research

leading to how it was trained, setting a new openness standard for

enterprise AI technology. The Snowflake Arctic LLM is a part of the

Snowflake Arctic model family, a family of models built by

Snowflake that also includes the best practical text-embedding

models for retrieval use cases.

This press release features multimedia. View

the full release here:

https://www.businesswire.com/news/home/20240424761970/en/

Snowflake Arctic adds truly open large

language model with unmatched intelligence and efficiency to the

Snowflake Arctic model family (Graphic: Business Wire)

“This is a watershed moment for Snowflake, with our AI research

team innovating at the forefront of AI,” said Sridhar Ramaswamy,

CEO, Snowflake. “By delivering industry-leading intelligence and

efficiency in a truly open way to the AI community, we are

furthering the frontiers of what open source AI can do. Our

research with Arctic will significantly enhance our capability to

deliver reliable, efficient AI to our customers.”

Arctic Breaks Ground With Truly Open, Widely Available Collaboration

According to a recent report by Forrester, approximately 46

percent of global enterprise AI decision-makers noted that they are

leveraging existing open source LLMs to adopt generative AI as a

part of their organization’s AI strategy.[1]

As the data foundation for more than 9,400 companies and

organizations around the world[2], Snowflake is empowering all users

to leverage their data with industry-leading open LLMs, while

offering them flexibility and choice in which models they work with.

Now with the launch of Arctic, Snowflake is delivering a

powerful, truly open model with an Apache 2.0 license that permits

ungated personal, research, and commercial use. Taking it one step

further, Snowflake also provides code templates, alongside flexible

inference and training options so users can quickly get started

with deploying and customizing Arctic using their preferred

frameworks. These will include NVIDIA NIM with NVIDIA

TensorRT-LLM, vLLM, and Hugging Face. For

immediate use, Arctic is available for serverless inference in

Snowflake Cortex, Snowflake’s fully managed service that

offers machine learning and AI solutions in the Data Cloud. It will

also be available on Amazon Web Services (AWS), alongside

other model gardens and catalogs, which will include Hugging

Face, Lamini, Microsoft Azure, NVIDIA API

catalog, Perplexity, Together AI, and more.

Arctic Provides Top-Tier Intelligence with Leading Resource-Efficiency

Snowflake's AI research team, which includes a unique

composition of industry-leading researchers and system engineers,

took less than three months and spent roughly one-eighth of the

training cost of similar models when building Arctic. By training on

Amazon Elastic Compute Cloud (Amazon EC2) P5 instances, Snowflake

is setting a new baseline for how fast state-of-the-art open,

enterprise-grade models can be trained, ultimately enabling users

to create cost-efficient custom models at scale.

As a part of this strategic effort, Arctic’s differentiated MoE

design improves both training systems and model performance, with a

meticulously designed data composition focused on enterprise needs.

Arctic also delivers high-quality results, activating 17 billion of

its 480 billion parameters at a time to achieve industry-leading quality

with unprecedented token efficiency. In an efficiency breakthrough,

Arctic activates roughly 50 percent fewer parameters than DBRX, and

75 percent fewer than Llama 3 70B during inference or training. In

addition, it outperforms leading open models including DBRX,

Mixtral-8x7B, and more in coding (HumanEval+, MBPP+) and SQL

generation (Spider), while simultaneously providing leading

performance in general language understanding (MMLU).
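The parameter comparison above can be checked with a little arithmetic. The following is a minimal sketch, not Snowflake's methodology. Arctic's 17 billion active and 480 billion total parameters come from this release. The 36 billion active parameters for DBRX (Databricks' published figure) and the 70 billion for Llama 3 70B (a dense model, so every parameter is active) are assumptions brought in from outside this release. The helper name `percent_fewer` is hypothetical.

```python
# Active parameters per token, in billions.
ARCTIC_ACTIVE_B = 17.0       # stated in this release
ARCTIC_TOTAL_B = 480.0       # stated in this release
DBRX_ACTIVE_B = 36.0         # assumption: Databricks' published figure
LLAMA3_70B_ACTIVE_B = 70.0   # assumption: dense model, all parameters active


def percent_fewer(ours: float, theirs: float) -> float:
    """Return the percentage by which `ours` is smaller than `theirs`."""
    return (1.0 - ours / theirs) * 100.0


if __name__ == "__main__":
    share = ARCTIC_ACTIVE_B / ARCTIC_TOTAL_B
    print(f"Share of Arctic's parameters active per token: {share:.1%}")
    print(f"Fewer active parameters than DBRX: "
          f"{percent_fewer(ARCTIC_ACTIVE_B, DBRX_ACTIVE_B):.0f}%")
    print(f"Fewer active parameters than Llama 3 70B: "
          f"{percent_fewer(ARCTIC_ACTIVE_B, LLAMA3_70B_ACTIVE_B):.0f}%")
```

Under these assumptions the reductions come out at about 53 and 76 percent, consistent with the "roughly 50 percent" and "75 percent" figures quoted above.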

Snowflake Continues to Accelerate AI Innovation for All Users

Snowflake continues to provide enterprises with the data

foundation and cutting-edge AI building blocks they need to create

powerful AI and machine learning apps with their enterprise data.

When accessed in Snowflake Cortex, Arctic will accelerate

customers’ ability to build production-grade AI apps at scale,

within the security and governance perimeter of the Data Cloud.

In addition to the Arctic LLM, the Snowflake Arctic family of

models also includes the recently announced Arctic embed, a family

of state-of-the-art text embedding models available to the open

source community under an Apache 2.0 license. The family of five

models is available on Hugging Face for immediate use and will

soon be available as part of the Snowflake Cortex embed function

(in private preview). These embedding models are optimized to

deliver leading retrieval performance at roughly a third of the

size of comparable models, giving organizations a powerful and

cost-effective solution when combining proprietary datasets with

LLMs as part of a Retrieval-Augmented Generation or semantic search

service.
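To illustrate the retrieval step that embedding models serve in semantic search or Retrieval-Augmented Generation, here is a minimal sketch. The three-dimensional vectors are made up for illustration and are not outputs of Arctic embed, whose real embeddings have far more dimensions. The names `cosine_similarity`, `top_k`, `DOCS`, and `QUERY` are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy "embeddings" (assumption: invented values, not from any real model).
DOCS = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "api_reference": [0.0, 0.2, 0.9],
}
QUERY = [0.8, 0.2, 0.1]

if __name__ == "__main__":
    # The retrieved documents would then be passed to an LLM as context.
    print(top_k(QUERY, DOCS, k=2))
```

In a real deployment the vectors would come from an embedding model and the ranking would typically be handled by a vector index rather than a full sort.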

Snowflake also prioritizes giving customers access to the newest

and most powerful LLMs in the Data Cloud, including the recent

additions of Reka and Mistral AI’s models. Moreover, Snowflake

recently announced an expanded partnership with NVIDIA to continue

its AI innovation, bringing together the full-stack NVIDIA

accelerated platform with Snowflake’s Data Cloud to deliver a

secure and formidable combination of infrastructure and compute

capabilities to unlock AI productivity. Snowflake Ventures has also

recently invested in Landing AI, Mistral AI, Reka, and more to

further Snowflake’s commitment to helping customers create value

from their enterprise data with LLMs and AI.

Comments On the News from AI Experts

“Snowflake Arctic is poised to drive significant outcomes that

extend our strategic partnership, driving AI access,

democratization, and innovation for all,” said Yoav Shoham,

Co-Founder and Co-CEO, AI21 Labs. “We are excited to see

Snowflake help enterprises harness the power of open source models,

as we did with our recent release of Jamba — the first

production-grade Mamba-based Transformer-SSM model. Snowflake’s

continued AI investment is an important factor in our choosing to

build on the Data Cloud, and we’re looking forward to continuing to

create increased value for our joint customers.”

“Snowflake and AWS are aligned in the belief that generative AI

will transform virtually every customer experience we know,” said

David Brown, Vice President Compute and Networking, AWS.

“With AWS, Snowflake was able to customize its infrastructure to

accelerate time-to-market for training Snowflake Arctic. Using

Amazon EC2 P5 instances with Snowflake’s efficient training system

and model architecture co-design, Snowflake was able to quickly

develop and deliver a new, enterprise-grade model to customers. And

with plans to make Snowflake Arctic available on AWS, customers

will have greater choice to leverage powerful AI technology to

accelerate their transformation.”

“As the pace of AI continues to accelerate, Snowflake has

cemented itself as an AI innovator with the launch of Snowflake

Arctic,” said Shishir Mehrotra, Co-Founder and CEO, Coda.

“Our innovation and design principles are in line with Snowflake’s

forward-thinking approach to AI and beyond, and we’re excited to be

a partner on this journey of transforming everyday apps and

workflows through AI.”

“There has been a massive wave of open-source AI in the past few

months,” said Clement Delangue, CEO and Co-Founder, Hugging

Face. “We're excited to see Snowflake contributing

significantly with this release not only of the model with an

Apache 2.0 license but also with details on how it was trained. It

gives the necessary transparency and control for enterprises to

build AI and for the field as a whole to break new ground.”

“Lamini's vision is to democratize AI, empowering everyone to

build their own superintelligence. We believe the future of

enterprise AI is to build on the foundations of powerful open

models and open collaboration,” said Sharon Zhou, Co-Founder and

CEO, Lamini. “Snowflake Arctic is important to supporting

that AI future. We are excited to tune and customize Arctic for

highly accurate LLMs, optimizing for control, safety, and

resilience to a dynamic AI ecosystem.”

“Community contributions are key in unlocking AI innovation and

creating value for everyone,” said Andrew Ng, CEO, Landing

AI. “Snowflake’s open source release of Arctic is an exciting

step for making cutting-edge models available to everyone to

fine-tune, evaluate and innovate on.”

“We’re pleased to increase enterprise customer choice in the

rapidly evolving AI landscape by bringing the robust capabilities

of Snowflake’s new LLM model Arctic to the Microsoft Azure AI model

catalog,” said Eric Boyd, Corporate Vice President, Azure AI

Platform, Microsoft. “Our collaboration with Snowflake is an

example of our commitment to driving open innovation and expanding

the boundaries of what AI can accomplish.”

“The continued advancement — and healthy competition between —

open source AI models is pivotal not only to the success of

Perplexity, but the future of democratizing generative AI for all,”

said Aravind Srinivas, Co-Founder and CEO, Perplexity. “We

look forward to experimenting with Snowflake Arctic to customize it

for our product, ultimately generating even greater value for our

end users.”

“Snowflake and Reka are committed to getting AI into the hands

of every user, regardless of their technical expertise, to drive

business outcomes faster,” said Dani Yogatama, Co-Founder and CEO,

Reka. “With the launch of Snowflake Arctic, Snowflake is

furthering this vision by putting world-class truly-open large

language models at users’ fingertips.”

“As an organization at the forefront of open source AI research,

models, and datasets, we’re thrilled to witness the launch of

Snowflake Arctic,” said Vipul Ved Prakash, Co-Founder and CEO,

Together AI. “Advancements across the open source AI

landscape benefit the entire ecosystem, and empower developers and

researchers across the globe to deploy impactful generative AI

models.”

Learn More:

- Register for Snowflake Data Cloud Summit 2024, June 3-6, 2024

in San Francisco, to get the latest on Snowflake’s AI

announcements, and check out Snowflake Dev Day on June 6, 2024 to

see these innovations in action.

- Users can go to Hugging Face to directly download Snowflake

Arctic and use Snowflake’s GitHub repo for inference and

fine-tuning recipes.

- Get more information and additional resources on Snowflake

Arctic, here.

- Dig into how the Snowflake AI research team trained Snowflake

Arctic in this blog.

- See how organizations are bringing generative AI and LLMs to

their enterprise data in this video.

- Stay on top of the latest news and announcements from Snowflake

on LinkedIn and Twitter.

[1] The State of Generative AI, Forrester

Research Inc., January 26, 2024.

[2] As of January 31, 2024.

About Snowflake

Snowflake makes enterprise AI easy, efficient, and trusted.

Thousands of companies around the globe, including hundreds of the

world’s largest, use Snowflake’s Data Cloud to share data, build AI

and machine learning applications, and power their business. The

era of enterprise AI is here. Learn more at snowflake.com (NYSE:

SNOW).


Kaitlyn Hopkins

Senior Product PR Lead, Snowflake

press@snowflake.com

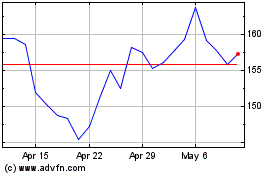

Snowflake (NYSE:SNOW)

Historical Stock Chart

From Mar 2024 to Apr 2024

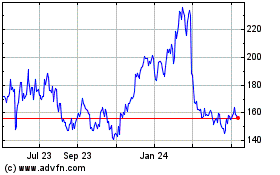

Snowflake (NYSE:SNOW)

Historical Stock Chart

From Apr 2023 to Apr 2024