New Custom Model Import capability lets customers easily bring their proprietary models to Amazon Bedrock so they can take advantage of its powerful capabilities

New Model Evaluation capability makes it easier and faster for customers to choose from the widest selection of fully managed models, including the new RAG-optimized Titan Text Embeddings V2 and the latest models from Cohere and Meta

Guardrails for Amazon Bedrock provides customers with best-in-class technology to help them effectively implement safeguards tailored to their application needs and aligned with their responsible AI policies

Tens of thousands of customers and partners, including adidas, ADP, Aha!, Amazon.com, Bridgewater Associates, Choice Hotels, Clariant, Delta Air Lines, Dentsu, FOX Corporation, GoDaddy, Hugging Face, Infor, Intuit, Kone, KT Corporation, LexisNexis Legal & Professional, Lonely Planet, Netsmart, New York Stock Exchange, Pearson, Pfizer, PGA TOUR, Perplexity AI, Ricoh USA, Rocket Mortgage, Ryanair, Salesforce, Siemens, Thomson Reuters, Toyota, Tui, United Airlines, and others, are using Amazon Bedrock to build and deploy generative AI applications

Amazon Web Services, Inc. (AWS), an Amazon.com, Inc. company (NASDAQ: AMZN), today announced new Amazon Bedrock innovations that offer customers the easiest, fastest, and most secure way to develop advanced generative artificial intelligence (AI) applications and experiences. Tens of thousands of customers have already selected Amazon Bedrock as the foundation for their generative AI strategy because it gives them access to the broadest selection of leading foundation models (FMs) from AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon, along with the capabilities and enterprise security they need to quickly build and deploy generative AI applications. Amazon Bedrock’s powerful models are offered as a fully managed service so customers do not need to worry about the underlying infrastructure, ensuring their applications operate with seamless deployment, scalability, and continuous optimization. Today’s announcements empower customers to run their own fully managed models on Amazon Bedrock, make it simple to find the best model for their use case, make it easier to apply safeguards to generative AI applications, and provide them even more model choice. To get started with Amazon Bedrock, visit aws.amazon.com/bedrock.

Organizations across all industries, from the world’s fastest-growing startups to the most security-conscious enterprises and government institutions, are using Amazon Bedrock to spark innovation, increase productivity, and create new end-user experiences. The New York Stock Exchange (NYSE) is leveraging Amazon Bedrock's choice of FMs and cutting-edge generative AI capabilities across several use cases, including processing thousands of pages of regulations to provide answers in easy-to-understand language. Ryanair, Europe’s largest airline, is using Amazon Bedrock to help its crew instantly find answers to questions about country-specific regulations or extract summaries from manuals, to keep their passengers moving. Netsmart, a technology provider that specializes in designing, building, and delivering electronic health records (EHRs) for community-based care organizations, is working to enhance the clinical documentation experience for healthcare providers. It aims to reduce the time spent managing individuals’ health records by up to 50% with its generative AI automation tool built on Amazon Bedrock, helping Netsmart clients speed up patient reimbursement submissions while also improving patient care.

“Amazon Bedrock is experiencing explosive growth, with tens of thousands of organizations of all sizes and across all industries choosing it as the foundation for their generative AI strategy because they can use it to move from experimentation to production more quickly and easily than anywhere else,” said Dr. Swami Sivasubramanian, vice president of AI and Data at AWS. “Customers are excited by Amazon Bedrock because it offers enterprise-grade security and privacy, a wide choice of leading foundation models, and the easiest way to build generative AI applications. With today’s announcements, we continue to innovate rapidly for our customers by doubling down on our commitment to provide them with the most comprehensive set of capabilities and choice of industry-leading models, further democratizing generative AI innovation at scale.”

New Custom Model Import capability helps organizations bring their own customized models to Amazon Bedrock, reducing operational overhead and accelerating application development

In addition to having access to the world’s most powerful models on Amazon Bedrock (from AI21 Labs, Amazon, Anthropic, and Cohere to Meta, Mistral AI, and Stability AI), customers across healthcare, financial services, and other industries are increasingly putting their own data to work by customizing publicly available models for their domain-specific use cases. When organizations want to build these models using their proprietary data, they typically turn to services like Amazon SageMaker, which offers best-in-class training capabilities to train a model from scratch or perform advanced customization on publicly available models such as Llama, Mistral, and Flan-T5. Since launching in 2017, Amazon SageMaker has become the place where the world’s high-performing FMs (including Falcon 180B, the largest publicly available model to date) are built and trained. Customers also want to use all of Amazon Bedrock’s advanced, built-in generative AI tools, such as Knowledge Bases, Guardrails, Agents, and Model Evaluation, with their customized models, without having to develop all these capabilities themselves.

With Amazon Bedrock Custom Model Import, organizations can now import and access their own custom models as a fully managed application programming interface (API) in Amazon Bedrock, giving them unprecedented choice when building generative AI applications. In just a few clicks, customers can take models that they customized on Amazon SageMaker, or with other tools, and easily add them to Amazon Bedrock. Once a model passes an automated validation process, customers can access it like any other model on Amazon Bedrock and get all the same benefits they get today, without needing to manage the underlying infrastructure: seamless scalability, powerful capabilities to safeguard their applications in line with responsible AI principles, the ability to expand a model’s knowledge base with retrieval augmented generation (RAG), the ability to easily create agents that complete multi-step tasks, and fine-tuning to keep teaching and refining models. With this new capability, AWS makes it easy for organizations to choose a combination of Amazon Bedrock models and their own custom models via the same API. Today, Amazon Bedrock Custom Model Import is available in preview and supports three of the most popular open model architectures (Flan-T5, Llama, and Mistral), with plans to support more in the future.
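The point about using one API for both kinds of models can be shown with a minimal sketch. It assumes the `bedrock-runtime` `InvokeModel` operation as boto3 exposes it (`invoke_model(modelId=..., contentType=..., accept=..., body=...)`). It also assumes that an imported Llama-architecture model accepts the same `prompt`, `max_gen_len`, and `temperature` body fields as the Bedrock-hosted Meta Llama models. The imported-model ARN is a hypothetical placeholder. The code only assembles requests and does not call AWS.

```python
import json

# Bedrock-hosted Meta Llama 3 model (on-demand model ID).
BASE_MODEL_ID = "meta.llama3-8b-instruct-v1:0"
# Hypothetical ARN of a model brought in through Custom Model Import.
IMPORTED_MODEL_ARN = "arn:aws:bedrock:us-east-1:123456789012:imported-model/example123"


def build_invoke_request(model_id: str, prompt: str, max_gen_len: int = 256,
                         temperature: float = 0.5) -> dict:
    """Return keyword arguments for bedrock-runtime invoke_model().

    Assumption: both the base model and the imported model accept a
    Llama-style JSON body with prompt, max_gen_len, and temperature.
    """
    body = {"prompt": prompt, "max_gen_len": max_gen_len, "temperature": temperature}
    return {
        "modelId": model_id,  # the only field that changes between models
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }


if __name__ == "__main__":
    for model in (BASE_MODEL_ID, IMPORTED_MODEL_ARN):
        request = build_invoke_request(model, "Summarize our returns policy in two sentences.")
        # With AWS credentials configured, this request could be sent with:
        # boto3.client("bedrock-runtime").invoke_model(**request)
        print(request["modelId"], request["body"])
```

Only `modelId` differs between the two requests. Application code that already calls Bedrock models could therefore switch to an imported model by changing a single identifier.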

Model Evaluation helps customers assess, compare, and select the best model for their application

With the broadest range of industry-leading models, Amazon Bedrock helps organizations meet any price, performance, or capability requirements they may have and allows them to run models on their own or in combination with others. However, choosing the best model for a specific use case requires customers to strike a delicate balance between accuracy and performance. Until now, organizations needed to spend countless hours analyzing how well each new model could meet their use case, limiting how quickly they could deliver transformative generative AI experiences to their end users. Now generally available, Model Evaluation is the fastest way for organizations to analyze and compare models on Amazon Bedrock, reducing the time spent evaluating models from weeks to hours so they can bring new applications and experiences to market faster.

Customers can get started quickly by selecting predefined evaluation criteria (e.g., accuracy and robustness) and uploading their own dataset or prompt library, or by selecting from built-in, publicly available resources. For subjective criteria or content requiring nuanced judgment, Amazon Bedrock makes it easy for customers to add humans into the workflow to evaluate model responses based on use-case-specific metrics (e.g., relevance, style, and brand voice). Once the setup process is finished, Amazon Bedrock runs evaluations and generates a report so customers can easily understand how each model performed across their key criteria and quickly select the best models for their use cases.
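Model Evaluation computes its metrics as a managed service. The snippet below is only a toy, local illustration of the underlying idea. It scores each candidate model's responses on a custom prompt dataset against reference answers, then ranks the models. It assumes JSON Lines records with `prompt` and `referenceResponse` keys, chosen to mirror Bedrock's custom prompt dataset fields as we understand them, so verify them against the current documentation. Normalized exact match stands in for a real metric, and the model names and responses are hypothetical.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical custom prompt dataset, one JSON object per line (JSON Lines).
DATASET_JSONL = """\
{"prompt": "What is the capital of France?", "referenceResponse": "Paris"}
{"prompt": "How many days are in a leap year?", "referenceResponse": "366"}
{"prompt": "What is 12 times 12?", "referenceResponse": "144"}
"""

# Hypothetical responses already collected from two candidate models, keyed by prompt.
CANDIDATE_RESPONSES: Dict[str, Dict[str, str]] = {
    "model-a": {
        "What is the capital of France?": "Paris",
        "How many days are in a leap year?": "366",
        "What is 12 times 12?": "124",
    },
    "model-b": {
        "What is the capital of France?": " paris ",
        "How many days are in a leap year?": "365",
        "What is 12 times 12?": "one hundred forty-four",
    },
}


def load_dataset(jsonl: str) -> List[dict]:
    """Parse JSON Lines text into a list of records, skipping blank lines."""
    return [json.loads(line) for line in jsonl.splitlines() if line.strip()]


def normalize(text: str) -> str:
    """Lowercase and trim whitespace so trivial formatting differences are ignored."""
    return text.strip().lower()


def exact_match_accuracy(records: List[dict], responses: Dict[str, str]) -> float:
    """Fraction of prompts whose response equals the reference after normalization."""
    hits = sum(
        normalize(responses.get(r["prompt"], "")) == normalize(r["referenceResponse"])
        for r in records
    )
    return hits / len(records)


def evaluation_report(records: List[dict],
                      candidates: Dict[str, Dict[str, str]]) -> List[Tuple[str, float]]:
    """Return (model, accuracy) pairs sorted from best to worst."""
    scores = [(name, exact_match_accuracy(records, resp)) for name, resp in candidates.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for model, score in evaluation_report(load_dataset(DATASET_JSONL), CANDIDATE_RESPONSES):
        print(f"{model}: {score:.2f}")
```

Exact match is deliberately crude here. Criteria such as style or brand voice cannot be scored this way, which is why the text above routes them to human reviewers instead.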

With Guardrails for Amazon Bedrock, customers can use best-in-class technology to easily implement safeguards that remove personal and sensitive information, profanity, and specific words, and block harmful content

For generative AI to be pervasive across every industry, organizations need to implement it in a safe, trustworthy, and responsible way. Many models use built-in controls to filter undesirable and harmful content, but most customers want to further tailor their generative AI applications so responses remain relevant, align with company policies, and adhere to responsible AI principles. Now generally available, Guardrails for Amazon Bedrock offers industry-leading safety protection on top of the native capabilities of FMs, helping customers block up to 85% more harmful content. Guardrails is the only solution offered by a top cloud provider that allows customers to have built-in and custom safeguards in a single offering, and it works with all large language models (LLMs) in Amazon Bedrock, as well as fine-tuned models. To create a guardrail, customers simply provide a natural-language description defining the denied topics within the context of their application. Customers can also configure thresholds to filter across areas like hate speech, insults, sexualized language, prompt injection, and violence, as well as filters to remove any personal and sensitive information, profanity, or specific blocked words. Guardrails for Amazon Bedrock empowers customers to innovate quickly and safely by providing a consistent user experience and standardizing safety and privacy controls across generative AI applications.
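The configuration described above maps onto a single request: a denied topic defined in natural language, content-filter strengths, personal-information handling, and blocked words. The minimal sketch below assumes the request shape of the boto3 `bedrock` client's `create_guardrail` operation as we understand it. The guardrail name, topic, blocked word, and messages are hypothetical. The code only assembles and sanity-checks the arguments; it does not call AWS.

```python
from typing import Dict, List

# Content-filter categories for the areas named above (assumed API enum values).
FILTER_TYPES = ["HATE", "INSULTS", "SEXUAL", "PROMPT_ATTACK", "VIOLENCE"]
STRENGTHS = {"NONE", "LOW", "MEDIUM", "HIGH"}


def build_guardrail_request(name: str, denied_topic: Dict[str, str],
                            blocked_words: List[str], strength: str = "HIGH") -> dict:
    """Assemble keyword arguments for a bedrock create_guardrail() call (not sent)."""
    if strength not in STRENGTHS:
        raise ValueError(f"unknown filter strength: {strength}")
    filters = [
        {
            "type": filter_type,
            "inputStrength": strength,
            # Prompt-attack detection screens user input only, so its output strength is NONE.
            "outputStrength": "NONE" if filter_type == "PROMPT_ATTACK" else strength,
        }
        for filter_type in FILTER_TYPES
    ]
    return {
        "name": name,
        # Denied topic described in natural language.
        "topicPolicyConfig": {
            "topicsConfig": [{
                "name": denied_topic["name"],
                "definition": denied_topic["definition"],
                "type": "DENY",
            }]
        },
        "contentPolicyConfig": {"filtersConfig": filters},
        # Custom blocked words plus the managed profanity list.
        "wordPolicyConfig": {
            "wordsConfig": [{"text": word} for word in blocked_words],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        # Mask email addresses; block US Social Security numbers outright.
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
            ]
        },
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't share that response.",
    }


if __name__ == "__main__":
    request = build_guardrail_request(
        name="support-bot-guardrail",  # hypothetical
        denied_topic={
            "name": "Investment advice",
            "definition": "Recommendations about specific stocks, funds, or other investments.",
        },
        blocked_words=["ExampleCompetitor"],  # hypothetical
    )
    print(request["contentPolicyConfig"]["filtersConfig"])
```

With credentials configured, the dictionary would be passed as `boto3.client("bedrock").create_guardrail(**request)`. Check the field names against the current `CreateGuardrail` API reference before relying on them.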

More model choice: introducing Amazon Titan Text Embeddings V2, the general availability of Titan Image Generator, and the latest models from Cohere and Meta

Exclusive to Amazon Bedrock, Amazon Titan models are created and pre-trained by AWS on large and diverse datasets for a variety of use cases, with built-in support for the responsible use of AI. Today, Amazon Bedrock continues to grow the Amazon Titan family, giving customers even greater choice and flexibility. Amazon Titan Text Embeddings V2, which is optimized for RAG use cases, is well suited for a variety of tasks such as information retrieval, question-and-answer chatbots, and personalized recommendations. To augment FM responses with additional data, many organizations turn to RAG, a popular model-customization technique where the FM connects to a knowledge source that it can reference to augment its responses. However, running these operations can be compute and storage intensive. The new Amazon Titan Text Embeddings V2 model, launching next week, reduces storage and compute costs while increasing accuracy. It does so by offering customers flexible embedding sizes, which reduce overall storage by up to 4x and significantly lower operational costs while retaining 97% of the accuracy for RAG use cases, outperforming other leading models.
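The "up to 4x" storage figure follows from the flexible output size. Titan Text Embeddings V2 can return 1,024-, 512-, or 256-dimensional vectors, selected with a `dimensions` request field. The sketch below builds such a request body and computes the raw vector storage. It assumes the model ID `amazon.titan-embed-text-v2:0`, a request body with `inputText`, `dimensions`, and `normalize` fields, 4-byte float32 components, and a hypothetical corpus of one million chunks. Index overhead is ignored.

```python
import json

# Assumed Bedrock model ID and request fields for Titan Text Embeddings V2.
MODEL_ID = "amazon.titan-embed-text-v2:0"
SUPPORTED_DIMENSIONS = (1024, 512, 256)
BYTES_PER_FLOAT32 = 4


def embedding_request(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """JSON body for bedrock-runtime invoke_model() requesting a given vector size."""
    if dimensions not in SUPPORTED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {SUPPORTED_DIMENSIONS}")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def raw_storage_bytes(num_vectors: int, dimensions: int) -> int:
    """Bytes needed to store float32 vectors, ignoring vector-index overhead."""
    return num_vectors * dimensions * BYTES_PER_FLOAT32


if __name__ == "__main__":
    chunks = 1_000_000  # hypothetical number of document chunks in a RAG index
    full = raw_storage_bytes(chunks, 1024)
    for dims in SUPPORTED_DIMENSIONS:
        size = raw_storage_bytes(chunks, dims)
        print(f"{dims:>4} dims: {size / 1e9:.3f} GB (1/{full // size} of the 1,024-dim size)")
    print(embedding_request("How do I reset my password?", dimensions=256))
```

At 256 dimensions, the raw vectors take a quarter of the space of 1,024-dimensional ones, which accounts for the 4x reduction. The 97% accuracy figure cited above is AWS's own measurement; this arithmetic does not reproduce it.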

Now generally available, Amazon Titan Image Generator helps customers in industries like advertising, ecommerce, and media and entertainment produce studio-quality images, or enhance and edit existing images, at low cost using natural language prompts. Amazon Titan Image Generator also applies an invisible watermark to all images it creates, helping identify AI-generated images to promote the safe, secure, and transparent development of AI technology and helping reduce the spread of disinformation. The model can also check for the existence of the watermark, helping customers confirm whether an image was generated by Amazon Titan Image Generator.
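Generating an image from a natural language prompt comes down to one request body. The minimal sketch below assumes the model ID `amazon.titan-image-generator-v1`, the `TEXT_IMAGE` task format, and a response whose JSON carries base64-encoded images in an `images` list. The prompt and parameter values are illustrative. The code only builds and decodes payloads and does not call AWS.

```python
import base64
import json
from typing import List

# Assumed Bedrock model ID for Titan Image Generator.
MODEL_ID = "amazon.titan-image-generator-v1"


def text_to_image_body(prompt: str, number_of_images: int = 1, width: int = 1024,
                       height: int = 1024, cfg_scale: float = 8.0, seed: int = 0) -> str:
    """JSON body for invoke_model() that requests images from a natural-language prompt."""
    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": number_of_images,
            "width": width,
            "height": height,
            "cfgScale": cfg_scale,  # higher values follow the prompt more closely
            "seed": seed,           # fixed seed makes results repeatable
        },
    })


def decode_images(response_body: str) -> List[bytes]:
    """Convert the base64 strings in the response's "images" list into raw image bytes."""
    return [base64.b64decode(img) for img in json.loads(response_body)["images"]]


if __name__ == "__main__":
    print(text_to_image_body("A studio photo of a ceramic coffee mug on a wooden table"))
```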

Also available today on Amazon Bedrock are the Meta Llama 3 FMs, and coming soon are the Command R and Command R+ models from Cohere. Llama 3 is designed for developers, researchers, and businesses to build, experiment, and responsibly scale their generative AI ideas. The Llama 3 models are a collection of pre-trained and instruction fine-tuned LLMs that support a broad range of use cases. They are particularly suited for text summarization and classification, sentiment analysis, language translation, and code generation. Cohere’s Command R and Command R+ models are state-of-the-art FMs that customers can use to build enterprise-grade generative AI applications with advanced RAG capabilities in 10 languages to support their global business operations.
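The instruction fine-tuned Llama 3 models expect prompts wrapped in Meta's chat template. The minimal sketch below formats a sentiment-analysis request, one of the use cases listed above. It assumes the Bedrock model ID `meta.llama3-8b-instruct-v1:0` and the Bedrock Meta Llama request fields `prompt`, `max_gen_len`, and `temperature`. The system and user messages are hypothetical.

```python
import json

# Assumed Bedrock model ID for the 8B instruction-tuned Llama 3 model.
MODEL_ID = "meta.llama3-8b-instruct-v1:0"


def llama3_prompt(user_message: str, system_message: str = "") -> str:
    """Wrap messages in the Llama 3 instruct chat template, leaving the assistant turn open."""
    parts = ["<|begin_of_text|>"]
    if system_message:
        parts.append(f"<|start_header_id|>system<|end_header_id|>\n\n{system_message}<|eot_id|>")
    parts.append(f"<|start_header_id|>user<|end_header_id|>\n\n{user_message}<|eot_id|>")
    # The model generates its reply after this open assistant header.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def llama3_body(user_message: str, system_message: str = "", max_gen_len: int = 512,
                temperature: float = 0.2) -> str:
    """JSON body for bedrock-runtime invoke_model() with the formatted prompt."""
    return json.dumps({
        "prompt": llama3_prompt(user_message, system_message),
        "max_gen_len": max_gen_len,
        "temperature": temperature,
    })


if __name__ == "__main__":
    print(llama3_body(
        "Classify the sentiment of this review as positive or negative: "
        "'The crew was friendly and the flight left on time.'",
        system_message="Answer with a single word.",
    ))
```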

What Amazon Bedrock customers and partners are saying

Built by Amazon, Rufus is a generative AI-powered expert shopping assistant trained on the company’s extensive product catalog, customer reviews, community Q&As, and information from across the web to answer customer questions on a variety of shopping needs and products, provide comparisons, and make recommendations based on conversational context. “To offer a superior conversational shopping experience to Amazon Stores customers, we worked to develop Rufus into one of the most advanced models ever created by Amazon, and one we knew would benefit our customers far beyond this initial application,” said Trishul Chilimbi, vice president and distinguished scientist, Stores Foundational AI at Amazon. “With Amazon Bedrock Custom Model Import, we are now able to bring Rufus’s advanced underlying model to internal Amazon developers, via Amazon Bedrock, allowing even more builders across our organization to access it as a fully managed API. Now, teams in businesses as diverse as Logistics and Studios are able to build with this model while benefiting from Amazon Bedrock’s streamlined development experience, accelerating the creation of new experiences for all of our customers across Amazon.”

Aha! is a software company that helps more than 1 million people bring their product strategy to life. “Our customers depend on us every day to set goals, collect customer feedback, and create visual roadmaps,” said Dr. Chris Waters, co-founder and chief technology officer at Aha! “That is why we use Amazon Bedrock to power many of our generative AI capabilities. Amazon Bedrock provides responsible AI features, which enable us to have full control over our information through its data protection and privacy policies, and block harmful content through Guardrails for Bedrock. We just built on it to help product managers discover insights by analyzing feedback submitted by their customers. This is just the beginning. We will continue to build on advanced AWS technology to help product development teams everywhere prioritize what to build next with confidence.”

Dentsu is one of the world's largest providers of integrated marketing and technology. “Over the past three months, we’ve been using the Amazon Titan Image Generator model in preview to generate realistic, studio-quality images in large volumes, using natural language prompts, specifically for product placement and brand aligned image generation,” said James Thomas, global head of technology, Dentsu Creative. “Our creative teams are impressed by Titan Image Generator's diverse content outputs which have helped generate compelling images for product placement campaigns around the globe. We are looking forward to experimenting with the model's new watermark detection feature to help increase transparency around our AI-generated content and build even greater trust with our clients.”

Pearson is a leading learning company, serving customers in nearly 200 countries with digital content, assessments, qualifications, and data. “We added Amazon Titan Image Generator to our content management platform because of the quality of the model along with the powerful security and indemnity protection it offers,” said Eliot Pikoulis, chief technology officer, Core Platforms at Pearson. “We’re impressed with Titan Image Generator’s ability to create stunning visuals from simple text descriptions, empowering our diverse team of designers, marketers, and content creators to bring their ideas to life with ease and speed. For us, Titan Image Generator is not just a tool but a catalyst for creativity, which allows us to create compelling course material for our learners in an easy and secure way.”

Salesforce, the #1 AI CRM, empowers companies to connect with their customers through the power of CRM + Data + AI + Trust. “AI is an integral part of our commitment to help our customers deliver personalized experiences across their Salesforce applications grounded in their Data Cloud data. As we integrate generative AI and enable customers to deliver grounded AI on their unified data, we want to evaluate all possible foundation models to ensure the ones we choose are the right fit for our customers’ needs,” said Kaushal Kurapati, senior vice president of AI Products at Salesforce. “Amazon Bedrock is a key part of our open ecosystem approach to models, and this new model evaluation capability has the potential to expedite how we compare and select models, with both automated and human evaluation options. Now, not only will we be able to assess the model on straightforward criteria, but also more qualitative criteria like friendliness, style, and brand relevance. With the enhanced productivity that the capability promises, operationalizing models for our customers will be easier and faster than ever.”

About Amazon Web Services

Since 2006, Amazon Web Services has been the world’s most comprehensive and broadly adopted cloud. AWS has been continually expanding its services to support virtually any workload, and it now has more than 240 fully featured services for compute, storage, databases, networking, analytics, machine learning and artificial intelligence (AI), Internet of Things (IoT), mobile, security, hybrid, media, and application development, deployment, and management from 105 Availability Zones within 33 geographic regions, with announced plans for 18 more Availability Zones and six more AWS Regions in Malaysia, Mexico, New Zealand, the Kingdom of Saudi Arabia, Thailand, and the AWS European Sovereign Cloud. Millions of customers, including the fastest-growing startups, largest enterprises, and leading government agencies, trust AWS to power their infrastructure, become more agile, and lower costs. To learn more about AWS, visit aws.amazon.com.

About Amazon

Amazon is guided by four principles: customer obsession rather than competitor focus, passion for invention, commitment to operational excellence, and long-term thinking. Amazon strives to be Earth’s Most Customer-Centric Company, Earth’s Best Employer, and Earth’s Safest Place to Work. Customer reviews, 1-Click shopping, personalized recommendations, Prime, Fulfillment by Amazon, AWS, Kindle Direct Publishing, Kindle, Career Choice, Fire tablets, Fire TV, Amazon Echo, Alexa, Just Walk Out technology, Amazon Studios, and The Climate Pledge are some of the things pioneered by Amazon. For more information, visit amazon.com/about and follow @AmazonNews.

Source: Amazon Web Services, Inc.

View source version on businesswire.com: https://www.businesswire.com/news/home/20240423839238/en/

Amazon.com, Inc.

Media Hotline

Amazon-pr@amazon.com

www.amazon.com/pr

Amazon.com (NASDAQ:AMZN)

Historical Stock Chart

From Mar 2024 to Apr 2024

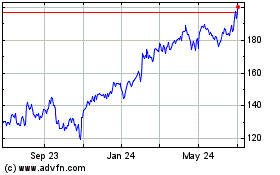

Amazon.com (NASDAQ:AMZN)

Historical Stock Chart

From Apr 2023 to Apr 2024